Uniquely Human: Reaching Our Full Potential

Think of the smartest person you know. What makes them more intelligent than the other people in your life? Do they have a photographic memory? Are they unbelievably creative? Can they perform complex calculations in their head? What makes that person so unique?

Each human brain has roughly the same number of neurons. Smart people do not have more computing power. Humans missing large chunks of their brains or an entire cerebellum can hold down jobs. Intelligence is not a function of “hardware.” It is determined by “software.” It depends on how the 86 billion neurons in your brain are configured.

In Artificially Human, I wrote that the primary challenge with forecasting the impact of automation is our lack of understanding of the brain. We can make reasonable predictions about technologies like AI using assumptions like Moore’s Law. Our models are frequently off by a decade or two, but that’s a blink of an eye in human history. In contrast, expert predictions for when we will achieve human-level intelligence (AGI) range from “now” to “never.”

Source: Survey distributed to attendees of the Artificial General Intelligence 2009 (AGI-09) conference

Few experts who make predictions about AGI offer a compelling definition of human intelligence. I don’t understand how you can make forecasts without a coherent model for the intelligence you are seeking to replicate.

Let me illustrate my point with an analogy. You pose the following problem to two experts: “A train leaves Chicago traveling 50 miles per hour. When will it reach its destination?” The first expert confidently answers, “2:45 pm.” The second expert claims, “5:30 pm is a more accurate estimate.” All the while, neither expert has bothered to ask for the destination. Welcome to the current state of AI discourse.

In this article, I describe what I see as the “destination” for AI and explain why the destination shifts over time. I then make a case for “uniqueness” as the final frontier of human intelligence. Finally, I reflect on what all this could mean for the future.

The Fourth Dimension

When I asked you to name the smartest person you know, did you struggle to compare different types of intelligence? Is a scientist more intelligent than a philosopher? Is an artist more intelligent than an athlete? There are exceptional humans in every field. Any model for intelligence needs to be flexible enough to apply generally.

I’ve struggled to find a satisfying model. The best I’ve discovered so far are those described by Jeff Hawkins in his books, On Intelligence and A Thousand Brains. Hawkins’ theories are compelling because they model the brain as a complete system and explain intelligence using neuroscience concepts. Unfortunately, Hawkins mostly punts on comparing human and machine intelligence.

The model that I use for human intelligence is simple. It consists of four dimensions, three of which relate to individual intelligence (your smartest friend) and one to our collective intelligence. I use this model because it’s flexible, holistic, and grounded in what we know about the brain.

Here are the three dimensions that I use for individual intelligence:

Fidelity: Your brain does not encode information perfectly. It stores patterns. For example, an apple and an orange are fruits that grow on trees. Can you recall the precise differences between an apple tree and an orange tree from memory? Probably not. There is little point in storing this information in detail unless you work in an orchard or study botany. In my model of the brain, fidelity is a proxy for knowledge and expertise. It’s the degree to which the patterns encoded in your brain allow you to recall information with high precision and accuracy. If the smartest person you know is an expert in a domain, that person excels in the fidelity dimension.

Range: If fidelity is knowledge and expertise, range is wisdom and creativity. The range dimension represents the breadth of patterns encoded in your brain and the extent to which those patterns are connected. I borrow the term from David Epstein’s book of the same name. In Range, Epstein writes, “The more contexts in which something is learned, the more the learner creates abstract models, and the less they rely on any particular example.” This dimension allows you to reason through analogy and find new patterns at the intersection of existing domains. If the smartest person you know often uncovers insights others miss or is exceptionally creative, I would say that person excels in the range dimension.

Efficiency: Generating action potentials and transmitting signals across neurons takes time. The speed with which you process data is a function of the pathways used. Learning a new skill is difficult initially because your brain processes new data through existing pathways. This is like taking the long way home from work. You arrive at your destination, but it takes more time. As you practice, more efficient neural pathways form. Eventually, pathways can become so efficient that the upper layers of your neocortex are no longer involved. You can do the task without consciously thinking about it. We don’t always consider people who excel in the efficiency dimension as “smart,” but we should. For example, professional athletes are incredibly efficient.

Fidelity, Range, and Efficiency are the dimensions I use to assess the intelligence of individual humans. A talented astrophysicist is not more intelligent than a bestselling fiction author or Formula 1 racing driver. Each excels in a different dimension.

Hebb’s rule states that “any two cells or systems of cells that are repeatedly active at the same time will tend to become ‘associated,’ so that activity in one facilitates activity in the other.” Put simply, neurons that fire together wire together. Hebb’s rule is a foundational principle of modern neuroscience.
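To make “fire together, wire together” concrete, here is a minimal sketch of a Hebbian weight update in Python. It is a textbook-style simplification, not a model of real neurons: the function names, the learning rate of 0.1, and the 0-to-1 activity scale are all assumptions chosen for illustration.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Return the connection weight after one time step.

    pre and post are activity levels (0.0 = silent, 1.0 = firing).
    The weight grows only when both cells are active at the same time.
    The learning rate is an arbitrary illustrative value.
    """
    return weight + learning_rate * pre * post


def train(pairs, weight=0.0, learning_rate=0.1):
    """Apply the update once for each (pre, post) activity pair."""
    for pre, post in pairs:
        weight = hebbian_update(weight, pre, post, learning_rate)
    return weight


# Two cells that fire together five times: the connection strengthens.
together = train([(1.0, 1.0)] * 5)

# Two cells that are each active, but never at the same time: no change.
apart = train([(1.0, 0.0), (0.0, 1.0)] * 5)

print(together > apart)
```

Repeated co-activation is the only thing that strengthens the connection in this sketch, which is the intuition the three individual dimensions rely on.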

I find this model appealing because it’s consistent with Hebb’s rule. If you are exposed to detailed information in a narrow domain, your neurons will encode that information with high fidelity. If you’re exposed to information from many domains, your neurons will encode a range of abstract patterns. If you’re repeatedly exposed to the same information, your neural pathways will be simple and highly efficient.

Each of us exhibits some combination of fidelity, range, and efficiency. It’s certainly the case that some neural pathways underpinning intelligence are shaped by genetics and biological factors (e.g., nutrition). However, I suspect that most of what we consider human intelligence is a function of training data. Specifically, it’s a function of what we see, hear, touch, smell, and taste when our brains are most plastic.

Thus far, I’ve outlined dimensions you could use to describe your smart friend. However, humans are social animals. The smartest person among us does not gate our intelligence. We can share knowledge, wisdom, and skills. What matters is cumulative intelligence. That brings me to the final dimension:

Uniqueness: This dimension represents the breadth of patterns stored across all human brains. Uniqueness differs from range, which measures the breadth of patterns stored in a single brain. I think of uniqueness as the inverse of redundancy. For example, over a billion humans have neural pathways dedicated to driving automobiles. This redundancy limits our cumulative intelligence. As self-driving cars enter the market, each of us will have more capacity to learn new skills. Driving is a skill that benefits us individually but drains our collective intelligence.
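One rough way to picture uniqueness as the inverse of redundancy is to treat each person as a set of skills and ask what share of all stored skills is distinct. The sketch below is a toy illustration only: the `uniqueness` function, the skill labels, and the idea of counting skills as discrete items are assumptions, not a measurement anyone has made.

```python
def uniqueness(population):
    """Share of stored patterns that are distinct across the population.

    population: list of sets, one set of skill labels per person.
    Returns a value in (0, 1]; 1.0 means nobody duplicates anyone else.
    """
    total = sum(len(skills) for skills in population)
    if total == 0:
        raise ValueError("population stores no patterns")
    distinct = len(set().union(*population))
    return distinct / total


# Hypothetical population in which everyone spends capacity on driving.
with_driving = [
    {"driving", "cooking"},
    {"driving", "welding"},
    {"driving", "poetry"},
]

# The same people after driving is automated and each learns something new.
without_driving = [
    {"botany", "cooking"},
    {"sailing", "welding"},
    {"chess", "poetry"},
]

print(uniqueness(with_driving) < uniqueness(without_driving))
```

In this toy example, each person holds the same number of skills before and after. Only the overlap changes, and the score rises as the overlap disappears.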

In my model, human intelligence is maximized when each person stores unique patterns and optimizes along one or more dimensions of individual intelligence (fidelity, range, efficiency). Achieving this goal is impossible today. The world is still powered by human labor. We need lots of humans with similar skills. Fortunately, artificial intelligence may help us overcome this limitation.

Automating Redundancy

Machine intelligence and human intelligence differ in many ways — an artificial neural network is not the same as a human brain. That said, the dimensions in my model can also be applied to machines.

Fidelity: Machines can store data with near-perfect fidelity. They don’t need to save space by storing patterns. A database stores information exactly as you enter it. I find criticisms of large language model (LLM) hallucinations pointless. LLMs excel in the dimension of range, not fidelity. If you want fidelity, use a database.

Range: What makes LLMs powerful is the breadth of patterns stored in their neural networks. GPT-3 contained 175 billion parameters, and its training text was filtered from about 45 terabytes of raw data. That was enough to generate human-level natural language across multiple domains. The number of parameters in GPT-4 has yet to be announced, but we know the model was also trained on image data. The range of patterns stored in AI models is increasing rapidly. We are far from reaching the limits of artificial intelligence’s problem-solving and creative abilities.

Efficiency: There are plenty of tasks humans can do that machines can’t. However, I struggle to think of a situation where machines are slower than humans on a like-for-like basis. Try multiplying a list of numbers faster than a spreadsheet or writing a bedtime story more quickly than an LLM. No machine is perfectly efficient, but artificial intelligence seems to have already surpassed human intelligence along this dimension.

In Artificially Human, I defined automation as “the act of replacing human labor with machine labor for an ever-increasing number of tasks.” It’s often difficult to see automation in action. Institutions are a complicated mess of human and machine labor. It’s challenging to see where human work ends and machine work begins.

To appreciate how automation occurs, picture a company powered entirely by human labor. Let’s make it a services business like a bank. There are tools like pencils and paper but no computers. How would the company be organized?

Like most companies, the structure would look like a pyramid. Employees at the pyramid’s base would be organized into teams with similar skills. For example, there would be a team of accounts payable (AP) clerks processing invoices. These workers excel in the efficiency dimension. AP clerks can process invoices faster and more accurately than the average human.

As we move up the pyramid, we find supervisors and managers. These workers are more experienced than entry-level employees and excel in the fidelity dimension. When an AP clerk has a question about an invoice, they ask a supervisor for help. The supervisor’s brain has more neural pathways related to invoice processing than the average employee.

At the top of the pyramid, we find multiple layers of senior managers and executives. These individuals excel in the range dimension. They piece together what happens in different parts of the organization (e.g., accounts payable, procurement, accounting). Most companies require several layers at this level to deal with the complexity of running a business.

Now, let’s automate the company by replacing humans with machines. At the base layer of the pyramid, we need simple machines that transfer and process data. For example, an application programming interface (API) can transfer data from suppliers to the company. These machines, like their human counterparts, excel in the efficiency dimension.

The next layer of the pyramid must keep track of the data gathered by the base layer. This is the institutional memory of the organization. We don’t often think of this role in human-powered organizations. However, the reason supervisors can answer questions is that they have more knowledge and expertise than the average worker. In the machine-powered firm, data stored at this level can be recalled with perfect fidelity and is accessible to all other machines in the company.

Finally, we need machines to run the business. This is where we hit a wall today. For all the incredible things GPT-4 can do, it doesn’t yet have the skills to manage a complex enterprise. That said, we see early signs that managerial tasks are within reach of machines (e.g., analyzing data using Code Interpreter for GPT-4). Humans are still the benchmark for range, but machines are gaining ground quickly.

Intelligence Hierarchy — Humans vs. Machines

I dramatically oversimplified the structure of companies and what it takes to run them. Supervisors are more than a data repository. They manage employees, troubleshoot issues, and collaborate with others. That said, many of these tasks exist because firms are powered by human labor. Most go away if a company runs entirely on machine labor.

Where does this leave us? If machines are on a path to outcompete humans on all three dimensions of individual intelligence, isn’t this the end of the road for human workers? Perhaps, but there is one more dimension we must consider.

Rise of Uniqueness

Most large companies have automated high-volume, routine tasks like invoice processing. There are still humans involved, but their work has changed. Instead of entering data, the workers manage exceptions and support the machines (e.g., software engineers). What was previously a homogeneous team of AP clerks has become a cross-functional team with unique skills.

Artificial intelligence is highly scalable. Once we have a machine that can perform a task better and more cheaply than humans, there is little stopping the machine from taking on all human work of that type.

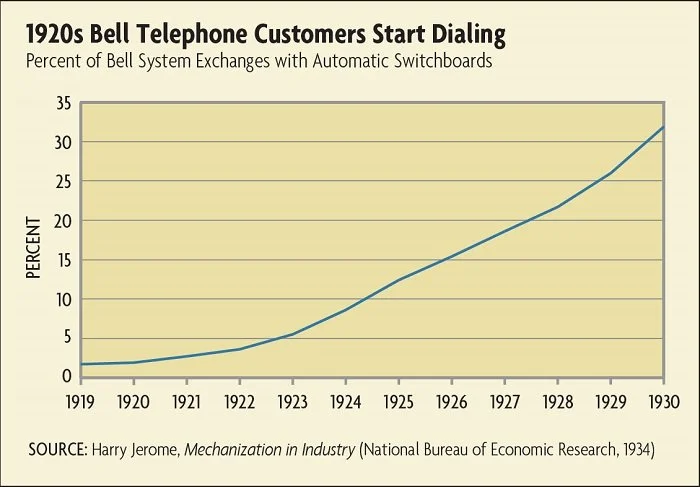

Looking back to the early 1900s, switchboard operators played an integral role in society. They were the “humans-in-the-loop” holding together an expanding telephony network. These workers were highly efficient but no match for the technology that would eventually replace them.

Today, there are about three million call center agents in the United States alone. Most serve as human-in-the-loop connections between third parties and a company’s IT systems. I expect these roles to go the way of the switchboard operator as digital channel adoption and chatbot capabilities advance.

Machines may be highly scalable, but they’re costly to build. Humans must document patterns (programming) or curate large datasets (machine learning). It doesn’t matter if the machine you’re building will do a task once or a billion times. The training process and its costs are similar either way.

The implication is that unique tasks are less feasible to automate. A common belief is that machines will do the simple stuff and humans will do the complex stuff. That’s inconsistent with the model of intelligence that I’ve described. I believe machines will do the common stuff, and humans will do the unique stuff.
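The economics behind this claim can be sketched with simple arithmetic: a machine carries a large one-time build cost that is spread over every repetition of the task, while a human costs roughly the same each time. The figures and function names below are hypothetical, chosen only to show the shape of the trade-off.

```python
import math


def machine_cost_per_task(build_cost, run_cost_per_task, volume):
    """Average cost per task once the build cost is spread over the volume."""
    return build_cost / volume + run_cost_per_task


def break_even_volume(build_cost, run_cost_per_task, human_cost_per_task):
    """Smallest number of repetitions at which the machine costs no more
    than human labor in total. Returns None if the machine never catches up.
    """
    saving = human_cost_per_task - run_cost_per_task
    if saving <= 0:
        return None
    return math.ceil(build_cost / saving)


# Hypothetical figures: $100,000 to build, $0.05 per run, $5.00 per human task.
BUILD, RUN, HUMAN = 100_000, 0.05, 5.00

common_task = machine_cost_per_task(BUILD, RUN, volume=1_000_000)
unique_task = machine_cost_per_task(BUILD, RUN, volume=1)

print(common_task < HUMAN)   # a task repeated a million times is cheap to automate
print(unique_task > HUMAN)   # a task done once is not
print(break_even_volume(BUILD, RUN, HUMAN))
```

Under these assumed numbers, the machine only pays for itself after tens of thousands of repetitions, which is why volume, rather than complexity, decides what gets automated in this model.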

We should stop thinking about intelligence at an individual level. Your smartest friend might be an incredible human but will struggle to keep pace with the machines. Instead, we should focus on our collective intelligence. We should maximize uniqueness.

Larry Tesler said, “Intelligence is whatever machines haven’t done yet.” I display that quote prominently on my website. Tesler is making a case for uniqueness whether he intended to or not. Human intelligence is a moving target, and uniqueness is the dimension along which it progresses.

Why this Matters

I began this article by criticizing experts who forecast the future of AI without providing a model for human intelligence. I have described my model and made my case for uniqueness as the best measure of human intelligence. So what? Why does this matter?

In Artificially Human, I claimed, “Machines need not achieve human-level intelligence to replicate the capabilities of humanity.” My point is that most of the intelligence we require to run society is redundant. The work of call center agents will be automated long before a single machine can compete with a single human.

Where will the displaced call center agents go? Some will become content creators. Others will start small businesses. Most will transition to the next wave of human-in-the-loop service jobs. This process will repeat until everyone has carved out a unique role.

I still see more redundancy than uniqueness in society. The fact that we can explain what we do for a living with simple job titles (e.g., sales clerk, truck driver, investment banker) is a sign of redundancy. We often focus on our differences, but we’re usually more alike than we care to admit.

It’s time to think differently about human intelligence. We can’t continue to live in a world constrained by human labor. A world that primarily values fidelity, range, and efficiency. A world where being the best is far more important than being the only. That’s a recipe for fear and anxiety.

I believe we’re a long way from artificial intelligence overtaking human intelligence. How we organize companies, set public policy, and educate our children reflects a society obsessed with individual intelligence. We have not begun to test the limits of uniqueness.

Machines will one day achieve artificial general intelligence. When that day comes, replacing human workers with machines may be feasible. Until then, I am excited to see how much further we can push the bounds of human intelligence.

Imagine a world where you are free to pursue your unique passions. None of us grew up wanting to be an AP clerk. The work we do today is the result of running a complex society on human labor. Machines may be coming for our jobs, but what if that gives us more space to be ourselves?