Misunderstood: AI’s Adoption Problem

Lately, I’ve been wondering: Am I the crazy one when it comes to AI?

Public discourse swings wildly between extremes. Is AI overhyped or a civilization-altering technology? Are foundation models intelligent or nothing more than power-hungry stochastic parrots? Is artificial general intelligence (AGI) close, or is the entire concept a pipe dream?

Most people seem to come down somewhere in the middle. AI is impressive but probably overhyped. Models are useful but not intelligent like us. AGI is possible, but it’s still years away.

These debates sound ridiculous to me. AI is already reshaping society, and foundation models clearly exhibit intelligence. AGI? That’s a tougher question, but it has little to do with AI. Neuroscience hasn’t cracked the mysteries of the brain, so what exactly are we measuring against?

Unlike with virtual reality or cryptocurrency, the more I use AI, the more excited I am about its future. The technology is here, and it works. I don’t need to imagine an avatar-filled metaverse or realignment of the global financial system. All I have to do is open my computer and watch the magic unfold before my eyes.

Maybe I’m the crazy one. Perhaps I’m caught in an AI echo chamber, blind to its limitations. But what if the real issue isn’t AI’s capabilities but our hesitation to embrace them? The gap between what AI can do and how most people use it is growing at an alarming rate.

In short, AI has an adoption problem.

Waiting Patiently

In 2014, I was working with a client on an outsourcing and offshoring program. The objective was to take a bunch of work done by expensive humans and give it to less costly humans. All I could think was, “Why are humans doing this work in the first place?”

That’s when I came across a video by CGP Grey, “Humans Need Not Apply,” that put my disjointed thoughts into a narrative. I dusted off whatever STEM skills I had remaining from my engineering degree and dug in.

It’s been more than a decade since CGP Grey published that video. On one hand, we’ve seen unbelievable progress in artificial intelligence and robotics. On the other hand, daily life hasn’t changed much.

I remember having conversations with a McKinsey Global Institute (MGI) partner in 2015. I was convinced automation and AI were coming, and McKinsey needed to get ahead of the curve. I even went so far as to stake my partnership election on it.

My colleague cautioned that it would take decades for society to fully adopt AI. His team projected that automation technologies would proliferate around 2025. OpenAI released ChatGPT on November 30, 2022, which put us a couple of years ahead of MGI’s “early scenario.”

Source: McKinsey Global Institute

What I found more intriguing were the adoption curves. MGI projected 50% adoption by 2037 in the “early scenario.” That seemed ridiculous. Wouldn’t a massive uptick in capabilities lead to rapid adoption?

The answer seems to be “no.” ChatGPT has 300 million weekly active users but only 10 million paid subscribers. To put that into perspective, less than 0.3% of the global workforce values ChatGPT enough to pony up $20 per month. I haven’t seen estimates for the $200 per month ChatGPT Pro subscription, but there can’t be many of us.
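The percentages above are easy to sanity-check. Here is a minimal sketch of the arithmetic in Python. The user and subscriber counts come from the paragraph above; the global workforce figure of roughly 3.5 billion is my own assumption for illustration, not a number from the sources cited here.

```python
# Back-of-the-envelope check of the adoption figures quoted above.
WEEKLY_ACTIVE_USERS = 300_000_000   # from the article
PAID_SUBSCRIBERS = 10_000_000       # from the article
GLOBAL_WORKFORCE = 3_500_000_000    # assumption: rough estimate, not from the article


def share(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    return 100 * part / whole


paid_vs_workforce = share(PAID_SUBSCRIBERS, GLOBAL_WORKFORCE)
paid_vs_active = share(PAID_SUBSCRIBERS, WEEKLY_ACTIVE_USERS)

print(f"Paid subscribers as share of workforce: {paid_vs_workforce:.2f}%")
print(f"Paid subscribers as share of weekly users: {paid_vs_active:.1f}%")
```

Under that workforce assumption, paid subscribers come to about 0.29% of workers, and only about 3.3% of weekly active users pay at all.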

Are adoption rates higher if you include Google, Anthropic, and other AI companies? Yes. Are software engineers feverishly building AI into every application imaginable? Also, yes. Have we automated 15 percent of the work in the 2016 baseline as projected in the “early scenario”? Not even close, and we had a two-year head start.

My colleague was right. Adoption is painfully slow when compared to technological innovation. Let’s explore why.

Who Needs Customers?

The adoption problem begins with the AI companies themselves. OpenAI released GPT-3 in June of 2020. The model was a huge step forward for AI capabilities — and most people barely noticed.

OpenAI’s most significant contribution to AI wasn’t GPT-3. It was ChatGPT, a simple website launched about two and a half years later. Within two months, the site had more than 100 million users. ChatGPT finally gave the average person a way to access advanced AI.

ChatGPT (December 2022)

Unfortunately, user interfaces (UIs) aren’t innovating at the same rate as AI models. OpenAI has added new features to ChatGPT over the past two years, but the website hasn’t changed much. Two of the most impressive features, advanced voice mode and deep research, disappear into the background of the current UI.

ChatGPT (February 2025)

I’m no designer, but I’ve watched plenty of people use ChatGPT. Most treat it like a search engine, which makes sense — the UI looks like Google. Few users have any idea what the models are capable of.

If AI companies want to attract and retain billions of customers, they must put more effort into UI design. Chat interfaces can’t be the final form — and I’m pretty sure the solution isn’t an 800 number in the year 2025.

AI companies also need to stop letting engineers name the models. What the heck is Gemini 1.5 Flash? Why isn’t there an o2 model between o1 and o3? Is an Opus better or worse than a Haiku? I know the answers to those questions, but I shouldn’t.

Source: Company Websites

If o3-mini-high is excellent for coding, call it the “coding model.” When o5-mini-high comes along, keep calling it the “coding model.” The current branding is fine for AI nerds like me. It’s not okay for the mass market.

Words matter. Brands matter. Toyota doesn’t change the name of the Camry every time it releases a new generation. If AI companies made their products less confusing, users might embrace them more quickly.

To be fair, most foundation model providers don’t see themselves as consumer software companies. Their priority is creating enterprise-grade models, and their application programming interfaces (APIs) tend to be well-crafted, well-documented, and well-supported.

Unfortunately, that strategy requires enterprises to innovate. Their track record hasn’t been stellar.

Pillage, then Build

In Artificially Human, I downplay the risk of enterprises replacing humans with AI. Machines work differently than people, and it’s not worth the effort for most businesses to retool. It’s easier to start from scratch.

This concept is at the heart of the Innovator’s Dilemma. New entrants pick off low-value customers with new business models, eventually moving up the value chain. By the time incumbents realize what’s happening, it’s too late.

The number of AI-powered businesses is exploding. Venture funding was relatively flat from 2023 to 2024, but funding for AI businesses leapt more than 80 percent to $101 billion. Raising money for a new company is increasingly difficult without an AI story.

Source: Crunchbase

Shouldn’t that be a boon for AI adoption? Not necessarily. Three-quarters of those venture dollars flowed into business-to-business (B2B) companies. Ironically, their primary customers are the same incumbents that AI is poised to eventually displace.

Source: CB Insights

When asked why he robbed banks, Willie Sutton replied, “Because that’s where the money is.” Venture capital isn’t building the next generation of businesses — yet. It’s funding B2B companies that know how to extract cash from legacy organizations. Why? That’s where the money is.

Legacy companies don’t need to go along for the ride. They could build and buy AI tools to increase the productivity of their existing workforces. If they eke out a few points of productivity each year, perhaps they can keep the AI disruptors at bay — or at least generate enough cash to buy them.

Unfortunately, most executives are so enthralled with reinventing their businesses that they ignore the “empower your people” path. They cite concerns over hallucinations, security, and other reasons for restricting employee access. AI is somehow an existential threat and also too risky to adopt at scale.

Employees exacerbate management’s concerns by using whatever AI tools they have available. You don’t want to know how many sensitive documents employees uploaded to DeepSeek over the past few weeks. These behaviors reinforce management’s distrust, further delaying AI adoption.

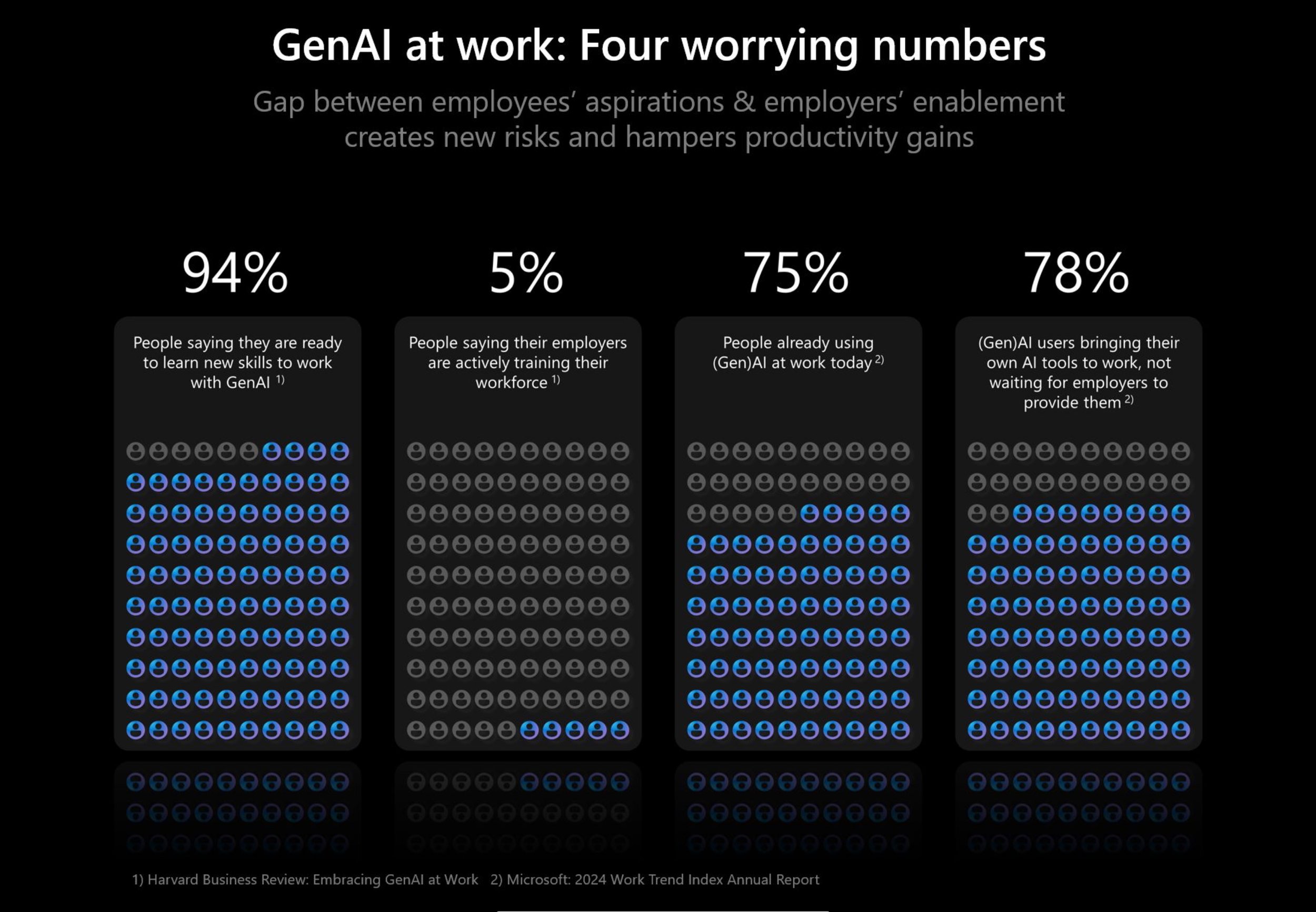

Source: Harvard Business Review, Microsoft (graphic by McKinsey)

At some point, the disruptors will overtake the incumbents. Legacy CEOs will blame their IT departments, technology vendors, and consultants for failing to reinvent their businesses. All the while, the solution was right under their noses — empowering their people with AI.

This brings us to the final barrier to AI adoption: human stubbornness.

Less Talking, More Doing

People have lots of opinions about AI. Most of those opinions aren’t grounded in experience. It reminds me of the bar scene in Good Will Hunting where Matt Damon says, “Were you gonna plagiarize the whole thing for us — you have any thoughts of — of your own on this matter?” I hear the same anecdotes over and over again.

I’m not the foremost authority on AI, but my perspectives are grounded in practice. I’ve built two AI-powered applications: Paper-to-Podcast and Purposefund. I use models from all the major players, including OpenAI, Google, Meta, Anthropic, Mistral, and DeepSeek. I’ve incorporated AI into most of my daily workflows — I even used OpenAI’s Deep Research to gather sources for this article.

VC Funding Report from OpenAI’s Deep Research

I’m (mostly) retired and only work a couple of hours a day. AI is what makes my lifestyle possible. It feels like I’m living in the future, where machines do most of the work, and I can enjoy my leisure time. It’s a cushy gig.

Why don’t more people venture down this path? Habits die hard, and AI takes more than it gives in the early days. That’s especially true if you want to build your own tools. Not everybody can spend their weekends learning Python.

You’d think my former consulting firm would be a rapid adopter of AI — smart, ambitious people who love technology. But most of the time, I felt like I was on an island. Partners wanted to sprinkle buzzwords into client decks, but the enthusiasm vanished when it came time to learn about AI.

Mastery comes from practice, not just theory. You don’t earn a driver’s license by acing a written test; you earn it by spending hours behind the wheel. Yet many people treat AI as an abstract concept rather than a practical skill. They’ll invest 40+ hours learning to drive but won’t spend a fraction of that time mastering a technology that could shape their future.

Human stubbornness is the biggest roadblock to AI adoption — we cling to the past, even when the future is knocking at our door.

The Bear Case

AI’s future is bright. The models we already have could fuel a decade of progress. A financial bubble might come, but another AI winter won’t follow. The bottleneck right now isn’t technology — it’s adoption.

We can’t make AI companies care more about design. We can’t force old-school businesses to trust their people. We can’t rush AI startups into scaling before they’re ready. Much is out of our control.

All we can do is conquer our individual adoption challenges. I don’t know how this will all unfold, but one thing is certain: those who embrace AI will have the advantage. You don’t need to outrun the bear — just the other humans.